Childcare Documentation Diagnostics

You may have noticed a new diagnostic tool that has recently been added to EarlyWorks: Programming Quality Indicators.

What are these programming quality indicators, and what do they indicate?

The purpose of the Programming Quality Indicators is to give the leadership team an indication of the extent to which educators are engaging with EarlyWorks’ programming tools. In other words, is your service making the most of what EarlyWorks has to offer? And how prepared is your service for Ratings and Assessment of Quality Area 1?

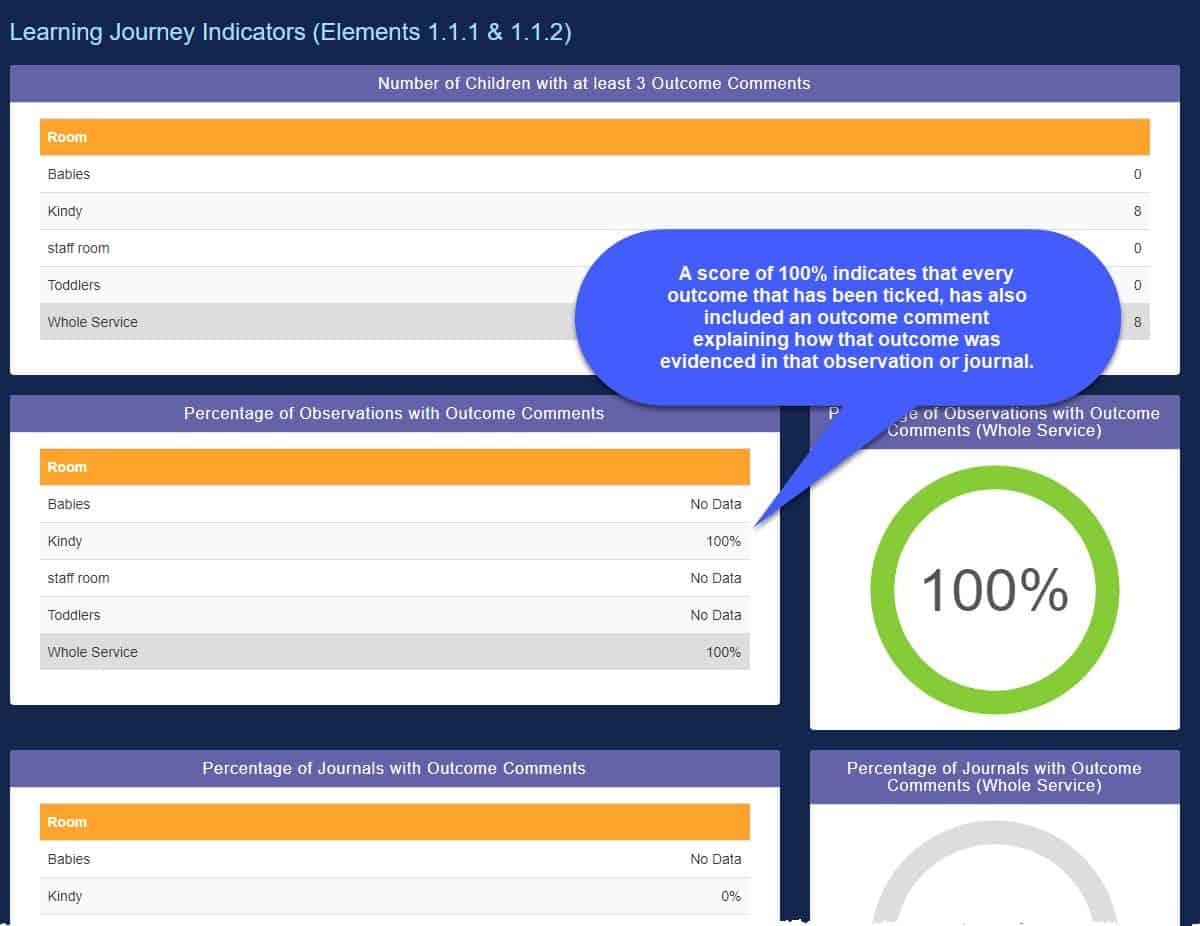

Learning Journey Indicators

Using the Learning Journey Indicators, the leadership team can see whether educators are just ticking outcome boxes, or actually determining the extent to which children are progressing towards and achieving outcomes.

According to The Early Years Learning Framework for Australia (p 17) the outcomes are ‘key reference points against which children’s progress can be identified, documented and communicated to families, other early childhood professionals and educators in schools.’ In other words, it is the comments that bring the outcomes to life, by explaining how the outcome was evidenced by that child on that day.

It is the outcome comments that will show, over time, the distance travelled by each child, as they become more sophisticated in the ways they evidence the outcomes. A simple tick of an outcome cannot provide this kind of summative assessment.

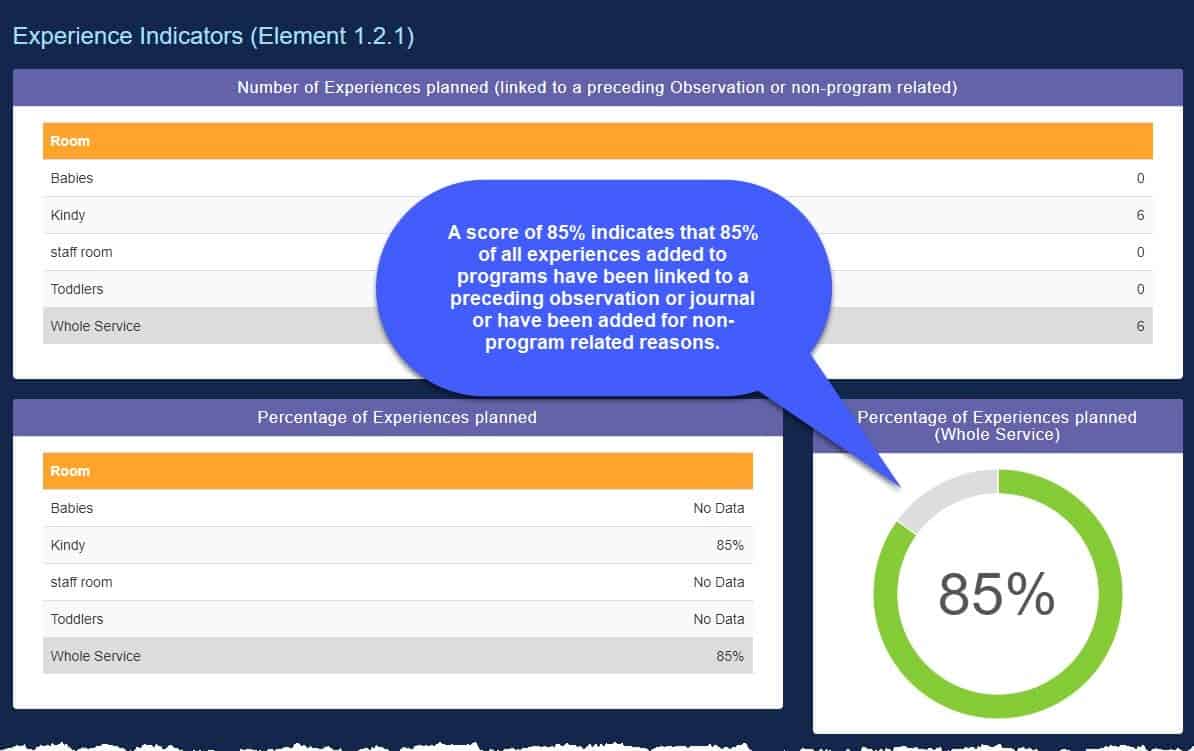

Experience Indicators

The purpose of the Experience Indicators is to ensure educators are clear about why they are adding experiences or activities to the program.

The Experience Indicators show the number of experiences that have been linked to preceding observations or have been added for non-program related reasons.

Sometimes educators may link an experience back to multiple observations. For example, an experience where children will be planting out seedlings might have multiple intended outcomes that link back to multiple observations: counting, cooperation, caring for the environment, participating in social groups, fairness, language development…

Sometimes educators will add experiences to the program for non-program related reasons: intentional teaching, family input, community input, or because of a child initiated or educator initiated spontaneous activity.

In short, the Experience Indicators show the extent to which educators ‘are deliberate, purposeful, and thoughtful in their decisions and actions’ (1.2.1), and child focused in their planning, ensuring ‘each child’s current knowledge, strengths, ideas, culture, abilities and interests are the foundation of the program’ (1.1.2).

Individual Planning Cycle Indicators

The purpose of the Individual Planning Cycle Indicators is to make it easy to monitor the linking between observations and experiences for each child in the service. This linking of observations to experiences being observed, and experiences to the preceding observations, is critical in ensuring ‘each child’s learning and development is assessed or evaluated as part of an ongoing cycle of observation, analysing learning documentation, planning, implementation and reflection’ (1.3.1).

One complete planning cycle is registered when a child is included in an observation, then that child is included in a planned experience linked to that observation (as preceding observation), and then that child is included in an observation of that experience (as experience observed). In short: observation > experience > observation. Two complete planning cycles would be: observation > experience > observation > experience > observation.
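The counting rule described above can be sketched in code. This is a minimal Python sketch of one possible reading of that rule, not EarlyWorks’ actual implementation: the `Observation` and `Experience` classes, their field names, and the `count_planning_cycles` function are all hypothetical. It assumes that every experience with both a preceding observation and a separate follow-up observation of that experience, each including the child, counts as one complete cycle.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Observation:
    # Hypothetical record; field names are assumptions for illustration.
    id: str
    children: set[str]
    experience_observed: Optional[str] = None  # id of the experience being observed


@dataclass
class Experience:
    # Hypothetical record; field names are assumptions for illustration.
    id: str
    children: set[str]
    preceding_observations: set[str] = field(default_factory=set)


def count_planning_cycles(child, observations, experiences):
    """Count observation > experience > observation chains for one child."""
    # Only observations that include this child can take part in a cycle.
    child_obs = {o.id: o for o in observations if child in o.children}
    cycles = 0
    for e in experiences:
        if child not in e.children:
            continue
        # Observations of this child that the experience was planned from.
        preceding = e.preceding_observations & set(child_obs)
        # Observations of this child that observe this experience.
        followups = {o.id for o in child_obs.values()
                     if o.experience_observed == e.id}
        # Require two distinct observations: one before, one after.
        if preceding and followups and len(preceding | followups) >= 2:
            cycles += 1
    return cycles


observations = [
    Observation("o1", {"Mia", "Tom"}),
    Observation("o2", {"Mia"}, experience_observed="e1"),
    Observation("o3", {"Mia"}, experience_observed="e2"),
]
experiences = [
    Experience("e1", {"Mia", "Tom"}, {"o1"}),
    Experience("e2", {"Mia"}, {"o2"}),
]

print(count_planning_cycles("Mia", observations, experiences))  # prints 2
print(count_planning_cycles("Tom", observations, experiences))  # prints 0
```

In this example Mia has the chain observation > experience > observation > experience > observation, so two cycles are counted. Tom was included in the first observation and the first experience but not in any observation of that experience, so no cycle is complete for him.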

Critical Reflection and Professional Collaboration Indicators

The purpose of the Critical Reflection and Professional Collaboration Indicators is to make it easy for the leadership team to monitor staff engagement in critical reflection.

As well as the number of reflections of pedagogy, the indicators show the percentage that include manager feedback and educator responses to that feedback. The manager feedback and educator response are important, as they indicate that educators are not just critically reflecting, but that the reflection is resulting in change or improvement in practice. The manager feedback gives the leadership team the opportunity to support and guide educators, while the educator response gives educators the space to reflect on any change they have made because of the feedback.

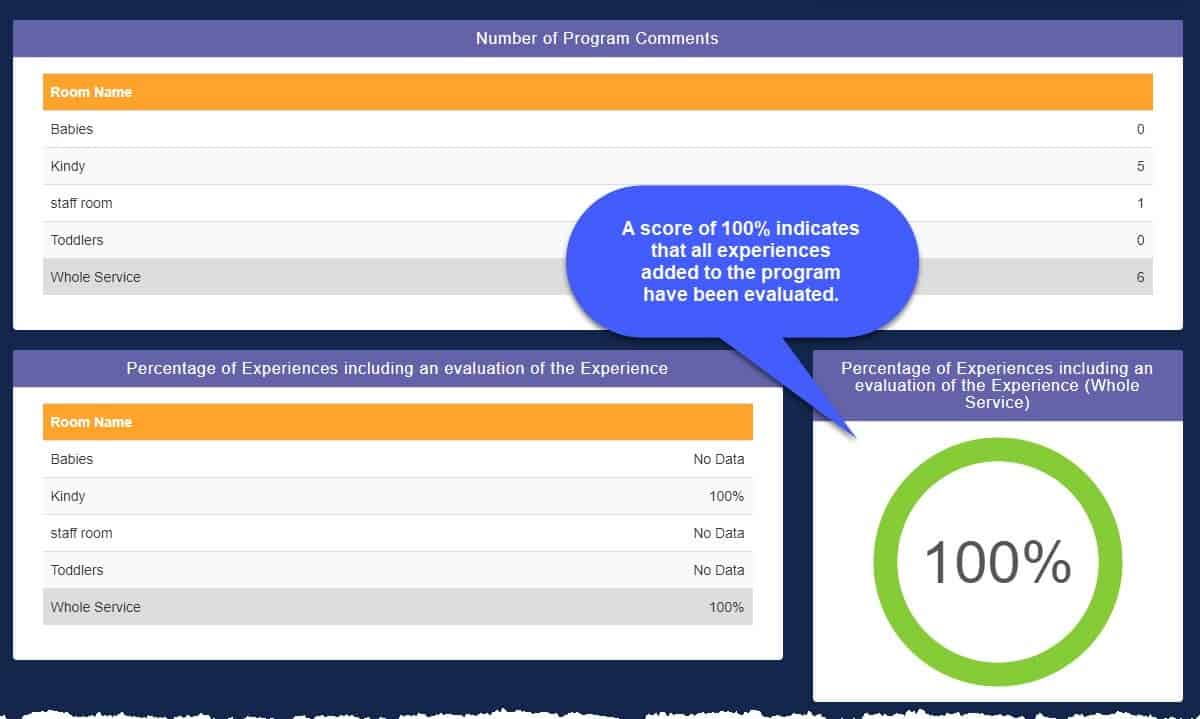

The number of program comments can be an indicator of the extent to which educators are collaborating and reflecting as a team. Program comments provide a space for educators to document discussions that might otherwise happen in a rush and then be forgotten. Educators in a room can add comments for each program, and they can also respond to comments. Not only is this evidence of critical reflection (1.3.2), but also of professional collaboration (4.2.1) and professionalism (4.2).

By monitoring the percentage of experiences that include an evaluation of the experience, the leadership team can see the extent to which educators are critically evaluating the effectiveness of experiences added to the program.

Educators might use this space to reflect on the effectiveness of the experience in achieving the intended outcomes, any unexpected outcomes that were achieved, what worked, and what they would change next time. Like the program comments, evaluations of experiences can provide evidence of critical reflection, professional collaboration and professionalism, as well as responsiveness, teaching and scaffolding (1.2.2) and assessment and planning cycle (1.3.1).

Important Note: These indicators are not a measure of the quality of the actual documentation, just that the main elements of documentation have been completed.